What do these two memes have in common?

tl;dr: Data from thousands of non-retracted articles indicate that experiments published in higher-ranking journals are less reliable than those reported in ‘lesser’ journals.

Vox health reporter Julia Belluz has recently covered the reliability of peer-review.

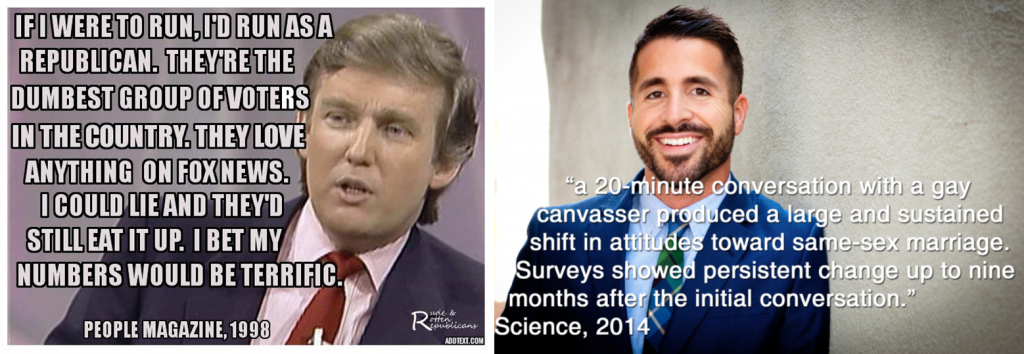

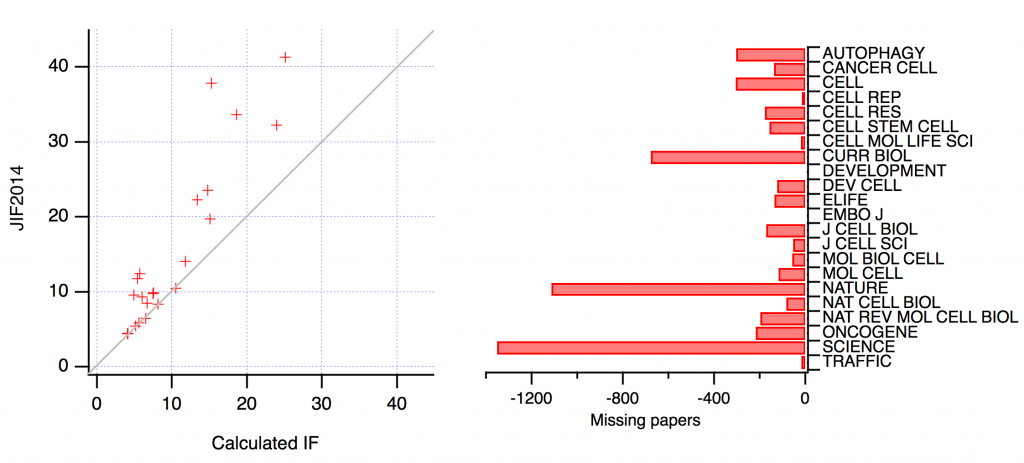

Over the last decade or two, there have been multiple accounts of how publishers have negotiated the impact factors of their journals with the “Institute for Scientific Information” (ISI), both before and after it was bought by Thomson Reuters. This is commonly done by negotiating which articles count as “citable” and are therefore included in the denominator.
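
Why the denominator matters can be shown with a small sketch. It assumes the commonly described two-year definition (citations received in year Y to everything a journal published in Y-1 and Y-2, divided by the number of “citable items” from those two years), and it uses made-up numbers for a hypothetical journal, not data from any real negotiation.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Two-year impact factor under the commonly described definition:
    citations in year Y to items from Y-1 and Y-2, divided by the
    number of items from Y-1 and Y-2 classified as 'citable'."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations / citable_items


# Hypothetical journal; all numbers are illustrative assumptions.
citations = 1000         # citations in year Y to anything published in Y-1 and Y-2
all_items = 400          # every item published in Y-1 and Y-2
negotiated_items = 250   # after reclassifying editorials, news, etc. as "non-citable"

print(impact_factor(citations, all_items))         # 2.5
print(impact_factor(citations, negotiated_items))  # 4.0
```

In this sketch the numerator is left unchanged, so reclassifying items as non-citable raises the impact factor without a single additional citation.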

tl;dr: It is a waste to spend more than the equivalent of US$100 in tax funds on a scholarly article. Collectively, the world’s public purse currently spends the equivalent of roughly US$10b every year on scholarly journal publishing. Dividing that by the roughly two million articles published annually, you arrive at an average cost per scholarly journal article of about US$5,000.
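
The arithmetic above can be spelled out in a few lines. The three inputs are the approximate figures from the paragraph; the ratio to the US$100 threshold and the implied annual difference are simply derived from them and are only as precise as those rounded inputs.

```python
# Approximate figures taken from the paragraph above.
TOTAL_SPEND_USD = 10_000_000_000   # ~US$10b spent per year on journal publishing
ARTICLES_PER_YEAR = 2_000_000      # ~2 million articles published per year
TARGET_COST_USD = 100              # the US$100-per-article threshold

# Average cost per article: total spend divided by article count.
cost_per_article = TOTAL_SPEND_USD / ARTICLES_PER_YEAR

# How many times the threshold the current average is.
overspend_factor = cost_per_article / TARGET_COST_USD

# Derived figure: annual difference if every article cost US$100.
potential_savings = TOTAL_SPEND_USD - TARGET_COST_USD * ARTICLES_PER_YEAR

print(f"Average cost per article: US${cost_per_article:,.0f}")  # US$5,000
print(f"Ratio to the US$100 threshold: {overspend_factor:.0f}x")  # 50x
print(f"Annual difference at US$100/article: US${potential_savings:,}")  # US$9,800,000,000
```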

In Germany, the constitution guarantees academic freedom in article 5 as a basic civil right. The main German funder, the German Research Foundation (DFG), routinely points to this article of the German constitution when someone suggests they should follow the lead of NIH, Wellcome et al. with regard to mandates requiring open access (OA) to publications arising from research activities they fund.

Posting my reply to a review of our most recent grant proposal has sparked an online discussion both on Twitter and on Drugmonkey’s blog. The discussion mainly turned to the question of what level of expertise to expect from the reviewers deciding on your grant proposal. This, of course, is highly dependent on the procedure by which the funding agency chooses the reviewers.

Update, Dec. 4, 2015: With the online discussion moving towards grantsmanship and the question of what level of expertise to expect from a reviewer, I have written down some thoughts on this angle of the discussion.

With more and more evaluations, assessments and quality control, the peer-review burden has skyrocketed in recent years.

Why our Open Data project worked (and how Decorum can allay our fears of Open Data). I am honored to guest post on Björn’s blog and excited about the interest in our work sparked by Björn’s response to Dorothy Bishop’s first post. As corresponding author on our paper, I will provide more context on our successful Open Data experience with Björn’s and Casey’s labs.

This is a response to Dorothy Bishop’s post “Who’s afraid of open data?”. After we had published a paper on how Drosophila strains that are referred to by the same name in the literature (Canton S) but came from different laboratories behaved completely differently in a particular behavioral experiment, Casey Bergman from Manchester contacted me, asking if we shouldn’t sequence the genomes of these five fly strains to find out how they

Over the last few months, there has been a lot of talk about so-called “predatory publishers”, i.e., those corporations that publish journals, some or all of which purport to peer-review submitted articles but publish them for a fee without actual peer-review. The origin of the discussion can be traced to a list of such publishers hosted by librarian Jeffrey Beall.