We’ve traded keyword constraints for infinite potential, and created a massive usability crisis in the process.

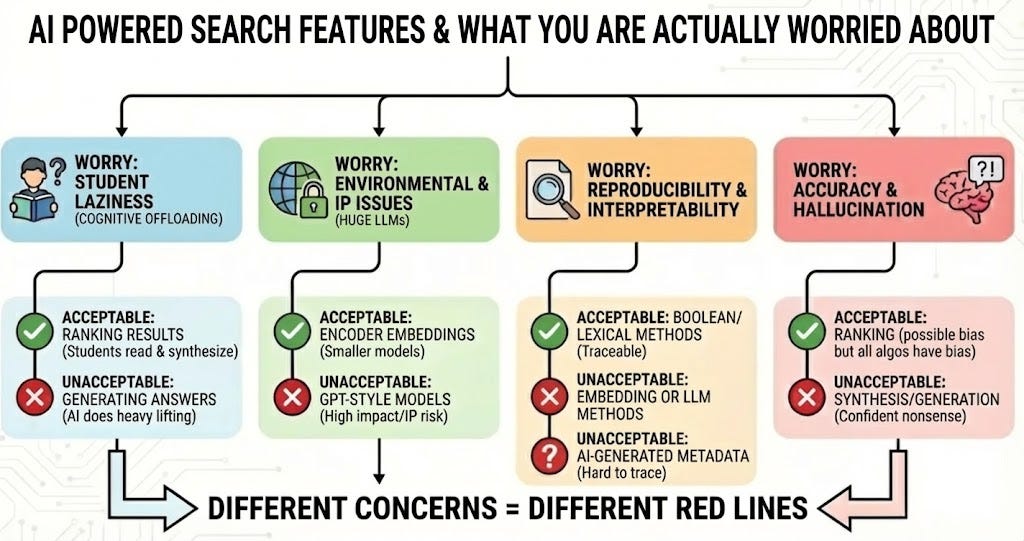

When we say "AI-powered search engine," we're conflating at least four different things, and your concerns about one may not apply to another.

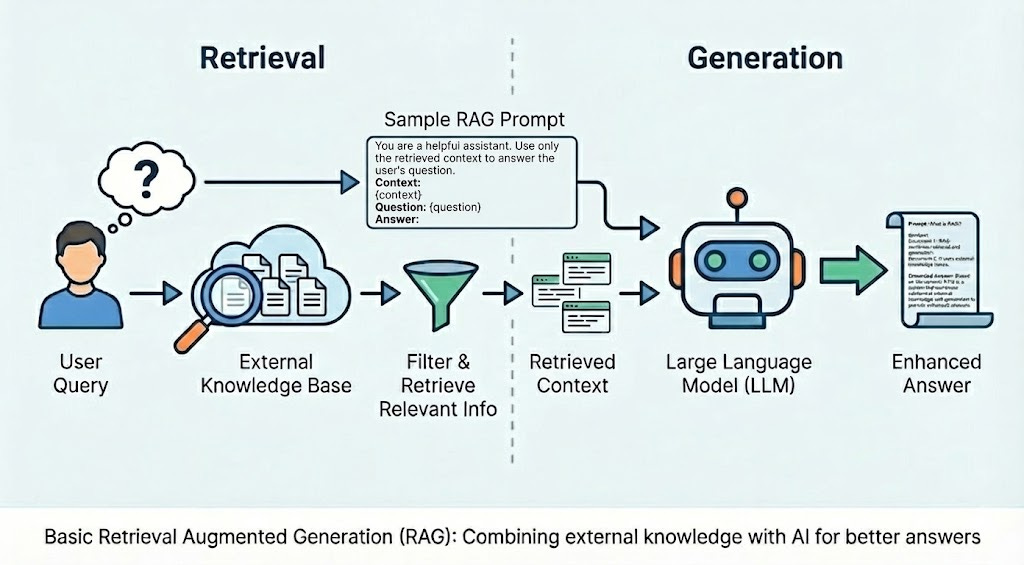

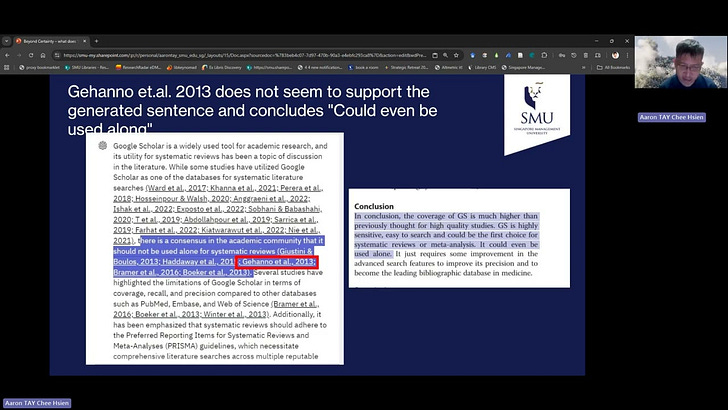

Ghost references existed long before LLMs. This post examines how Google Scholar's [CITATION] mechanism and web pollution may undermine RAG verification.

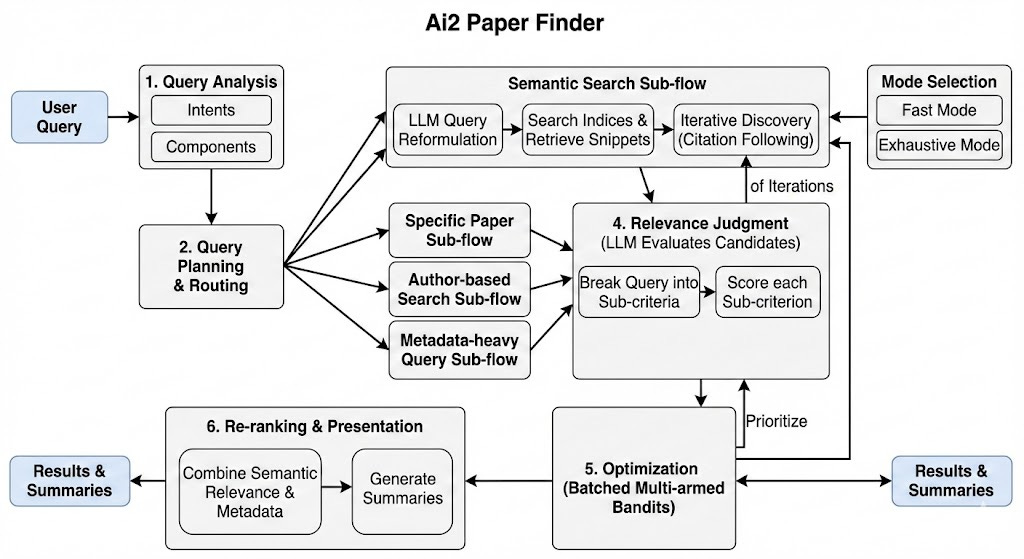

The Agentic Illusion: Most academic Deep Research tools run fixed workflows and stumble when given unfamiliar literature review tasks that do not fit them.

AI search tools like Elicit, Consensus, and Scite.ai have spent years racing to build centralised indexes of academic content, first by indexing openly available content and then by seeking publisher partnerships.

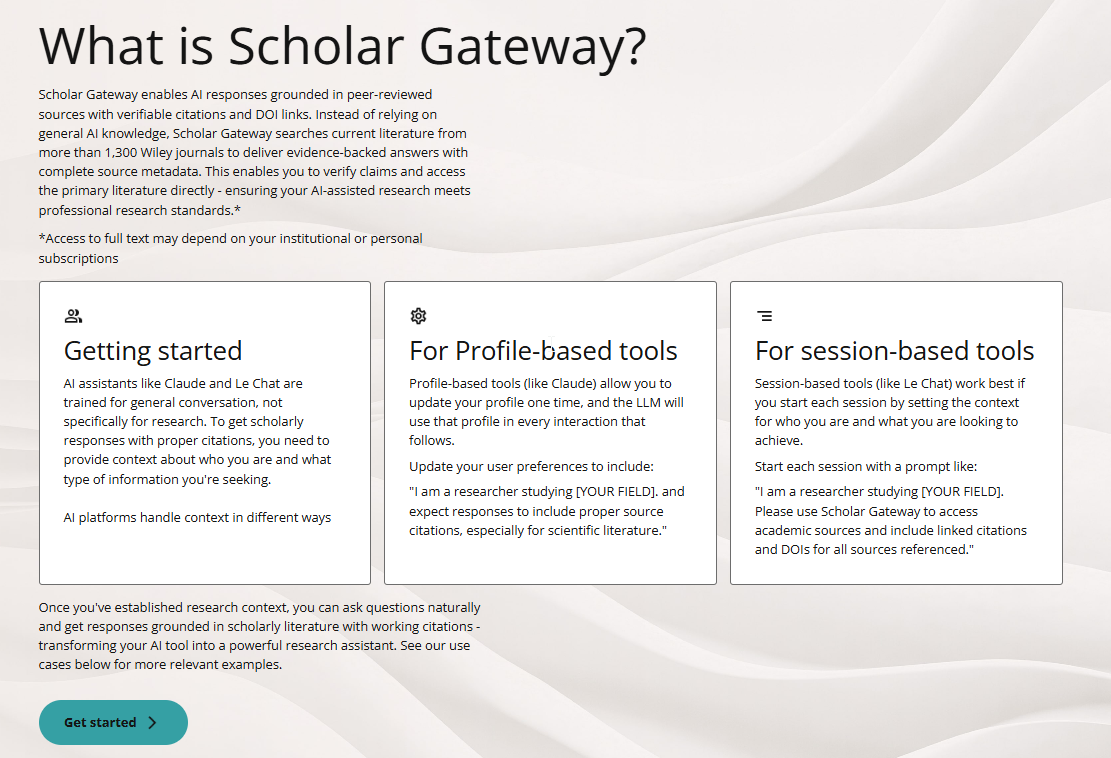

Generated by Nano-Banana Pro from the text of this blog post

As "AI-powered academic search engines" began their rise in 2023, the biggest question on everyone's mind was: Where is Google Scholar? While Gemini Deep Research came and went, it relied primarily on the general web, much like its rival, OpenAI Deep Research. Crucially, it failed to leverage Google's greatest competitive advantages: the Google Scholar index and Google Books.

Academic librarians are increasingly being asked about AI-powered search tools that promise to revolutionize literature discovery. Consensus is one of the earliest and more prominent players in this space (alongside Elicit and Perplexity), positioning itself as a tool that can not only find relevant papers but also assess the "consensus" of research on a topic.

TL;DR: ResearchRabbit shipped its biggest update in years: a cleaner iterative "rabbit hole" flow, a more configurable citation graph, and an optional premium tier. The company now "partners" with Litmaps (others are reporting it as an acquisition), which shows up in its features and business model. The free tier limits each search to <50 input papers / <5 authors and one project;

Recently, a librarian from a prestigious institution whom I met at a conference surprised me when he confessed that he and his colleagues were struggling to grasp the issues surrounding the impact of AI. My talk helped clear much of the fog around how to think about the impact of AI on search. His confession wasn't an isolated one. Many librarians I speak with admit they struggle to keep up with the blizzard of new AI-powered search engines.

Disclosure: I am on the program committee of FORCE 2026, and my institution will be hosting the event in Singapore. I am happy to answer any questions you might have. FORCE11's annual conference, FORCE2026, will take place 3–5 June 2026 at Singapore Management University under the theme "To Go Far, Go Together: Advancing Scholarly Communication Across Boundaries and Disruptions." The call for proposals is open!

A longer, 30-minute recorded version of a talk I gave on 8 October 2025 at the symposium "Beyond Certainty - What does 'Discovery' mean in an open and artificially intelligent world?" A good catch-up post if you want a concise summary of my current views on "AI-powered search" in academic discovery.

Thanks for reading Aaron Tay's Musings about Librarianship!